Approximate Neural Architecture Search via Operation Distribution Learning

Abstract

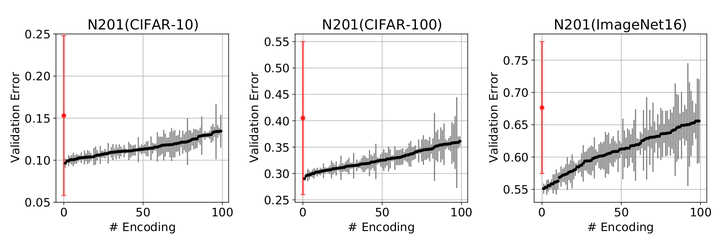

The standard paradigm in neural architecture search (NAS) is to search for a fully deterministic architecture with specific operations and connections. In this work, we instead propose to search for the optimal operation distribution, which provides a stochastic, approximate solution that can be used to sample architectures of arbitrary length. We propose, and show experimentally, that the performance of an architectural cell depends largely on the ratio of operations used rather than on any specific connection pattern; that is, small changes in the ordering of the operations are often irrelevant. This intuition is orthogonal to any specific search strategy and can be applied to a diverse set of NAS algorithms. Through extensive experimental evaluation on four datasets and four NAS techniques (Bayesian optimisation, differentiable search, local search and random search), we show that the operation distribution (1) holds enough discriminating power to reliably identify a solution and (2) is significantly easier to optimise than traditional encodings, leading to large speed-ups at no cost in performance. This simple intuition significantly reduces the cost of current approaches and is a necessary step towards enabling the use of NAS in a broader range of research applications.
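To make the idea of sampling from an operation distribution concrete, the following is a minimal sketch, not the authors' implementation. It assumes a cell can be summarised as a flat sequence of operations drawn independently from a categorical distribution. The operation names, the helper functions `sample_cell` and `operation_ratio`, and the example probabilities are all hypothetical, and connection patterns are deliberately left out.

```python
import random
from collections import Counter

# Hypothetical candidate operations; the abstract does not name the search space.
OPERATIONS = ["conv3x3", "conv1x1", "skip", "avg_pool"]


def sample_cell(op_probs, num_ops, rng):
    """Draw `num_ops` operations independently from a categorical distribution.

    `op_probs` maps each operation name to its (unnormalised) probability.
    The same distribution can be sampled at any length.
    """
    ops = list(op_probs)
    weights = [op_probs[op] for op in ops]
    return rng.choices(ops, weights=weights, k=num_ops)


def operation_ratio(cell):
    """Return the fraction of the cell occupied by each candidate operation."""
    counts = Counter(cell)
    return {op: counts[op] / len(cell) for op in OPERATIONS}


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the run is reproducible
    # Assumed example distribution, not a result from the paper.
    dist = {"conv3x3": 0.5, "conv1x1": 0.2, "skip": 0.2, "avg_pool": 0.1}

    short_cell = sample_cell(dist, 6, rng)
    long_cell = sample_cell(dist, 600, rng)

    print(short_cell)
    print(operation_ratio(long_cell))
```

In this sketch a search algorithm would optimise the entries of `dist` instead of a discrete graph encoding. Longer samples have empirical ratios that tend towards the underlying distribution, which is one way to read the claim that a single distribution describes architectures of arbitrary length.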