Abstract

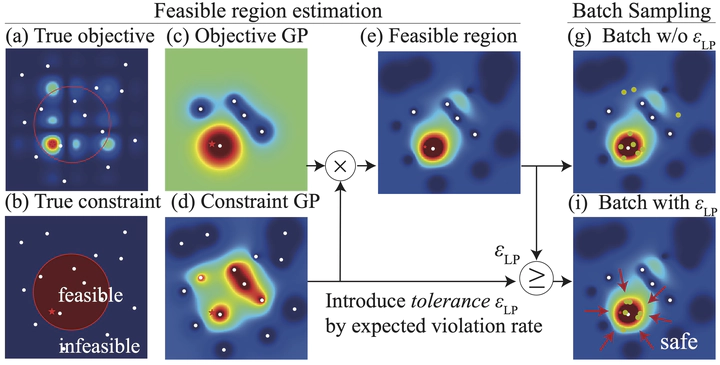

Active learning parallelization is widely used, but it typically relies on a batch size that is fixed throughout experimentation. This fixed approach is inefficient because of a dynamic trade-off between cost and speed: larger batches are more costly, whereas smaller batches lead to slower wall-clock run-times, and the balance may shift over the course of a run (larger batches are often preferable early on). To address this trade-off, we propose a novel Probabilistic Numerics framework that adapts the batch size. We frame batch construction as a quantization task, that is, approximating a continuous target distribution (e.g., the acquisition function) with a discrete distribution (the batch samples), and we measure batch quality by the divergence between the two. Instead of fixing the batch size, we fix the precision of this approximation, which allows both the batch size and the query locations to adjust dynamically. We further extend the framework to settings with probabilistic constraints, interpreting constraint violations as reductions in the precision requirement so that batch construction adapts accordingly. Through extensive experiments, we demonstrate that our approach significantly improves learning efficiency and flexibility across diverse Bayesian batch active learning and optimization applications.
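The idea of fixing the approximation precision rather than the batch size can be illustrated with a minimal sketch. It is not the authors' method and rests on several assumptions: the target is a normalized acquisition function on a 1-D candidate grid, the divergence is the squared maximum mean discrepancy (MMD) under a Gaussian kernel, and points are added by greedy MMD minimization (which coincides with kernel herding when k(x, x) is constant). The names `rbf`, `mmd2`, `adaptive_batch`, `example_target`, `lengthscale`, and `tol`, as well as the bimodal acquisition function, are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def rbf(x: float, y: float, lengthscale: float = 0.2) -> float:
    """Gaussian (RBF) kernel on scalars; k(x, x) = 1 for every x."""
    return math.exp(-((x - y) ** 2) / (2.0 * lengthscale ** 2))


def mmd2(candidates: Sequence[float], weights: Sequence[float],
         batch: Sequence[float]) -> float:
    """Squared MMD between the weighted target on `candidates` and the
    uniform empirical distribution on `batch` (assumed non-empty)."""
    n = len(batch)
    tt = sum(wi * wj * rbf(xi, xj)
             for xi, wi in zip(candidates, weights)
             for xj, wj in zip(candidates, weights))
    tb = sum(w * rbf(x, b) for x, w in zip(candidates, weights) for b in batch) / n
    bb = sum(rbf(a, b) for a in batch for b in batch) / (n * n)
    return tt - 2.0 * tb + bb


def adaptive_batch(candidates: Sequence[float], weights: Sequence[float],
                   tol: float, max_size: int) -> Tuple[List[float], float]:
    """Add points until the squared MMD to the target drops to `tol`
    (the fixed precision) or `max_size` is reached; the batch size is an output."""
    # Kernel mean embedding of the target at each candidate: mu(x) = sum_j w_j k(x, x_j).
    mu = [sum(w * rbf(x, xj) for xj, w in zip(candidates, weights)) for x in candidates]
    batch: List[float] = []
    err = math.inf
    while len(batch) < max_size:
        n = len(batch)
        # Greedy step that minimizes the squared MMD of the enlarged batch; with a
        # constant k(x, x) this is the kernel herding rule
        # argmax_x mu(x) - (1 / (n + 1)) * sum_b k(x, b).
        best = max(range(len(candidates)),
                   key=lambda i: mu[i] - sum(rbf(candidates[i], b) for b in batch) / (n + 1))
        batch.append(candidates[best])
        err = mmd2(candidates, weights, batch)
        if err <= tol:  # precision reached: stop growing the batch
            break
    return batch, err


def example_target() -> Tuple[List[float], List[float]]:
    """Hypothetical bimodal acquisition function on a 1-D grid, normalized to weights."""
    grid = [i / 40 for i in range(41)]
    acq = [math.exp(-((x - 0.3) ** 2) / 0.005) + 0.5 * math.exp(-((x - 0.8) ** 2) / 0.005)
           for x in grid]
    total = sum(acq)
    return grid, [a / total for a in acq]


if __name__ == "__main__":
    grid, weights = example_target()
    for tol in (1e-2, 1e-3, 1e-4):
        batch, err = adaptive_batch(grid, weights, tol=tol, max_size=20)
        print(f"tol={tol:g}  batch size={len(batch)}  MMD^2={err:.2e}")
```

Because the greedy sequence of points does not depend on `tol`, a tighter tolerance can only extend the batch, so the batch size is determined by the precision requirement rather than set in advance. The sketch does not deduplicate repeated candidates and does not model probabilistic constraints.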